I was messing around with IBM’s Alchemy API, a web service that analyzes the sentiment of a piece of text. You send it some text, and it returns a score from -1 to 1 based on the sentiment, or mood, of the statement: 1 for highly positive statements, down to -1 for strongly negative verbiage.

At first the results were pretty predictable. Here’s what Alchemy thought of “I hate you!”:

```shell
curl http://access.alchemyapi.com/calls/text/TextGetTextSentiment \
  -d outputMode=json \
  -d apikey="[FIND YOUR OWN DAMN KEY]" \
  -d text='I hate you!' | jq .
```

I mean, it’s kind of a jerky thing to say, and judging by the results, Alchemy agreed:

```json
"docSentiment": {
  "type": "negative",
  "score": "-0.812109"
}
```
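If all you want is the label and the score, jq can pull them out instead of printing the whole response. Here’s a minimal sketch as a shell function; the name `sentiment` is just something I made up, and it assumes `docSentiment` sits at the top level of the response, as the excerpt above suggests:

```shell
# Hypothetical helper: reads an Alchemy sentiment response (JSON) on stdin
# and prints "<type> <score>". Assumes docSentiment is a top-level field.
sentiment() {
  jq -r '.docSentiment | "\(.type) \(.score)"'
}
```

To use it, pipe the curl command into `sentiment` instead of `jq .`. Given the response shown above, it prints `negative -0.812109`. Note that the score comes back as a JSON string, not a number, so `-r` just prints it as-is.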

It was pretty good about scoring the opposite statement, “I love you!”:

```shell
curl http://access.alchemyapi.com/calls/text/TextGetTextSentiment \
  -d outputMode=json \
  -d apikey="[FIND YOUR OWN DAMN KEY]" \
  -d text='I love you!' | jq .
```

Results for “I love you!”:

```json
"docSentiment": {
  "type": "positive",
  "score": "0.811401"
}
```

Nicely, the two opposite statements came back with almost identical absolute values (0.812109 vs. 0.811401).

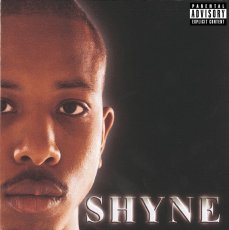
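To put a number on “almost identical”, here’s a quick awk check on the two scores from above, hard-coded so no API call is needed. `magnitude_gap` is a made-up helper name, not part of Alchemy:

```shell
# Hypothetical helper: prints |a| - |b| to six decimal places,
# where a and b are sentiment scores passed as arguments.
magnitude_gap() {
  awk -v a="$1" -v b="$2" 'function abs(x) { return x < 0 ? -x : x }
    BEGIN { printf "%.6f\n", abs(a) - abs(b) }'
}

# "I hate you!" vs. "I love you!"
magnitude_gap -0.812109 0.811401
```

That prints `0.000708`, so the two magnitudes differ by well under a thousandth.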

But things took a dark turn when I tried to run the hook of my favorite rap song by Shyne through the service…

```shell
curl http://access.alchemyapi.com/calls/text/TextGetTextSentiment \
  -d outputMode=json \
  -d apikey="[FIND YOUR OWN DAMN KEY]" \
  -d text='Thas gangsta' | jq .
```

Alchemy completely misconstrued “Thas gangsta”:

```json
"docSentiment": {
  "type": "negative",
  "score": "-0.235614"
}
```

Methinks someone needs to explain to the Alchemists that while “gangster” refers to the violent criminality associated with the underground economy, the term “gangSTA” connotes strength, glamour, wealth, and community, all highly positive concepts.

Alas, another example of how stupid computers can be.